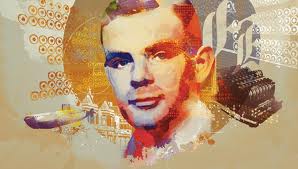

Computers will often solve systems of linear equations using the LU Decomposition. In fact, the LU Decomposition was introduced by the famous Computational Scientist, Alan Turing. In 1948, Turing published a remarkable paper on this topic called “Rounding Off Errors in Matrix Processes” (Quarterly Journal of Mechanical and Applied Math, pp. 287-308).

In his paper, Turing formulated Gaussian Elimination as the Matrix LU Factorization and introduced the Condition Number of a Matrix which are now both fundamental notions in the modern area of Numerical Analysis.

During the 1940’s there were other great thinkers working on the problem of Gaussian Elimination such as Von Neumann, Herman Goldstien, and Harold Hotelling. These thinkers worked on Gaussian Elimiation as a method to solve systems of linear equations in modern “automatic computers”. At the time it was uncertain as to whether Gaussian Elimination was appropriate.

Turing formulated the LU Factorization of a Matrix, showing that Gaussian Elimination computes an LU Factorization. He introduced the term “Condition Number” and defined two matrix condition numbers. He also exploited backward error ideas and finnally and most importantly, he analyzed Gaussian Elimination with partial pivotings for general matrices and obtained a bound for the error (James Wilkinson).

But what is LU Factorization exactly? Many key ideas of linear algebra are really factorizations of a matrix; the original matrix A becomes the product of two or three special matrices. In this case, we will be transforming the system Ax=b to an equivalent system Ux=y whose coefficient matrix is upper triangular. The system Ux=y can then be solved easily by back substitution if U is nonsingular. If two systems are equivalent then they have the same solution.

We will transform the system by a means of elementary operations of three types::

1. Add a multiple of one equation to another equation.

2. Interchange two equations.

3. Multiply an equation by a non zero constant.

Proposition If A^x = b^is obtained from Ax=b by an elementary operation of type 1,2, or3., then the systems Ax=b and A^x=b^ are equivalent.

If we were to prove this proposition we may think to show that all of the solutions of A^x=b^ are the same. We can do this by using 1., or we can use (2) if we recognize that Ax=b can be recovered from A^x=b^ by operation of type 1: If A^x=b^ was obtained from Ax=b by adding m times the jth row to the ith row, then Ax=b can be recovered fro A^x=b^ by adding -m times the jth row to the ith row.

It is convenient to represent the system Ax=b by an augmented matrix. An Augmented Matrix is a matrix obtained by appending the columns of two given matrices ususally for the purpose of performing elementary row operations on each of the given matrices.

For example, given the matrices A and B where

the augmented matrix (A|B) can be given

![<br /><br /><br /><br />

(A|B)=<br /><br /><br /><br />

\left[\begin{array}{ccc|c}<br /><br /><br /><br />

1 & 3 & 2 & 4 \\<br /><br /><br /><br />

2 & 0 & 1 & 3 \\<br /><br /><br /><br />

5 & 2 & 2 & 1<br /><br /><br /><br />

\end{array}\right].<br /><br /><br /><br />](https://i0.wp.com/upload.wikimedia.org/math/9/0/b/90bb313a8261f5819bbd627527a4fe68.png)

This is useful when solving systems of linear equations.

In our example, it is convenient to represent the system Ax=b by an augmented matrix (A|b). Each equation in the system Ax=b corresponds to a row of the matrix (A|b). The elementary operations on the equations amount to the following elementary row operations on (A|b):

1. Add a multiple of one row to anther row.

2. Interchange two rows.

3. Multiply a row by a nonzero constant.

You can also apply elementary row operations to A alone leaving off the vector b.

The factors L and U are triangular matrices. The factorization that comes from elimination is A=LU.

U is the upper triangular matrix with pivots on its diagonal.